Today I attended day one of the AI Infra Summit at the Santa Clara Convention Center. About 4,000 people registered, up from 1,500 last year. By the way, “infra” stands for infrastructure. Speakers addressed what it takes to build and run AI.

I noticed a trend. Speakers used a few AI-related terms over and over, like “inference” and “token.” They liked to say “AI factories” instead of, or more often than, “data centers.” The term “math” or “AI math” was tossed around a lot. The Tensordyne booth had a digital sign that went so far as to say, “AI is math.”

I decided to blog about the AI terms used by speakers that got my attention at AI Infra.

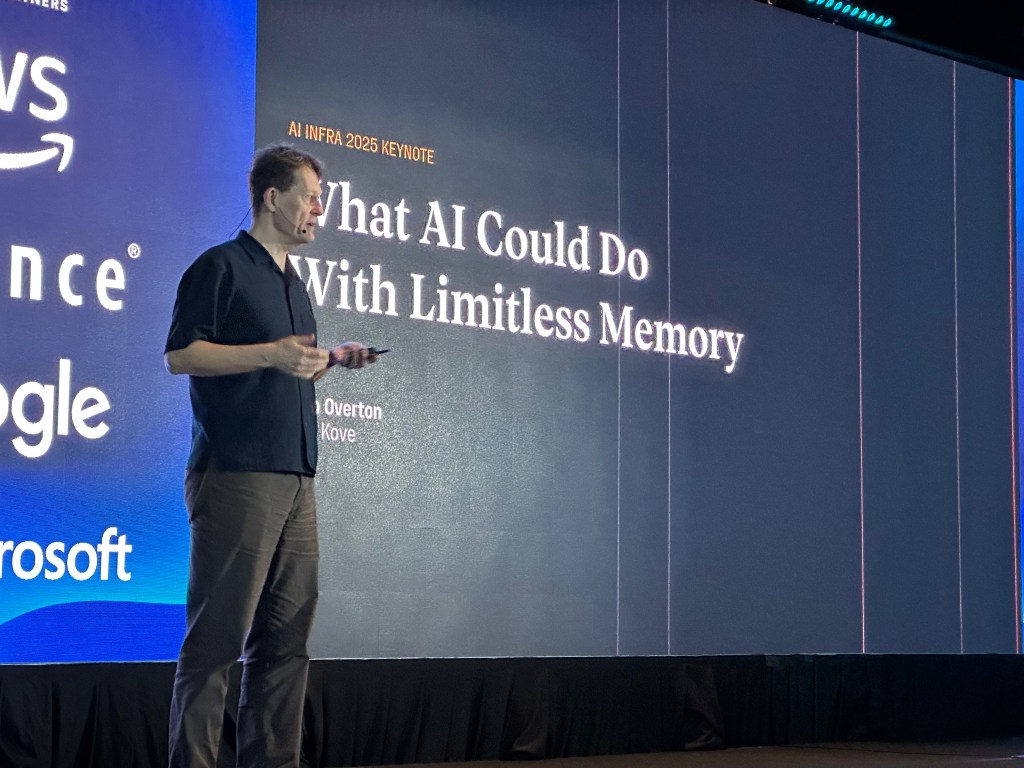

I sat through the press conference (photo below) featuring five companies, including two very young startups, as well as mainstage keynotes by Meta, NVIDIA, AWS, Kove (unique software-based memory) and Siemens.

Thank you to Royal Huang, PhD, CTO of SuperTech FT – a 501(c)(3) that teaches young people a practicum of physical AI and robotics – for checking my list of terms and commenting. Full disclosure: SuperTech FT sponsored my conference attendance. Huang is an AI consultant who has worked in automobile robotics, health tech, edtech and more. (Royal is pictured below under the tiger in the AI Infra exhibit hall.)

Here’s my list of Top 10 AI Infra conference terms:

1. INFERENCE: I heard this dozens of times and saw it on many slides, from the start of the press conference at 8:15 am right through to the last mainstage keynote hours later. AI inference is the process of using a trained artificial intelligence model to generate predictions, insights or outputs from new, unseen data. John Overton, PhD, Kove’s CEO, showed a slide that said, “Unlocking AI inference.” Kove innovates by making unique software-based memory.

2. TOKENS: NVIDIA’s website says, “Tokens are tiny units of data that come from breaking down bigger chunks of information.” It adds, “The language and currency of AI, tokens are units of data processed by AI models during training and inference, enabling prediction, generation and reasoning.” Speaking of tokens, NVIDIA’s VP of Hyperscale, Ian Buck, PhD, announced a new GPU today called Rubin CPX. It will be online by the end of 2026 and will be able to handle “one million tokens,” which apparently is a big deal. It reminds me of that Austin Powers movie line, “One million dollars.”

3. AI FACTORIES: Speakers used “AI factories” much more than “data centers” on their slides. Not new, but still interesting: Meta plans to build a ginormous data center that can handle very advanced AI. Today, one gigawatt, which can power all of San Francisco, is considered big. Yee Jiun Song, PhD, VP of Engineering at Meta, mentioned in the first mainstage keynote that a five-gigawatt data center is planned! It will be called Hyperion and be the size of Manhattan.

Royal Huang commented on this topic, “Think of it this way. The data center is the soil and AI is the crop.”

These next three are more commonly used by business people:

4. LLMs: A lot of speakers talked about training large language models, or LLMs.

5. AI MATH: Today’s speakers said “math” several times. AI performs calculations. AI enables calculations. AI can save or cost a lot of money. There is a lot of math involved, apparently. Recall that Tensordyne’s booth had a digital sign that said, “AI is math.”

At lunch I asked the person sitting next to me, Asif Batada, Sr. Product Marketing Manager at Alphawave Semi, if he thought math was important and used often in AI. He said, “Yes, math is like water,” and then switched the word to “oil.” He elaborated, “Math is like oil; smart people are working on optimally using oil.” Interesting analogy.

6. GPUs and CPUs: A GPU (graphics processing unit) is a specialized electronic circuit designed to rapidly process and render images, videos and animations, as well as handle scientific computing, AI and machine learning. A CPU (central processing unit) is a semiconductor chip that acts as the brain of a computer. NVIDIA Rubin CPX (a future product) is a GPU. Fun fact: NeuReality’s CEO said during the press conference that you don’t want to cram too many GPUs together because performance could suffer. I guess there’s an assumption that more GPUs are better. He says not necessarily.

These last words or terms are a bit overused but still valid:

7. OPEN SOURCE: Several speakers mentioned that their products work with many other brands of technology. Open computing is still a big deal.

8. SCALE: AI consultant Huang advised, “Everyone says they scale. But scaling is the toughest thing to do.” I’m not a huge fan of this term for exactly this reason: almost everyone in tech claims they “scale.” It’s better to give the proof than to just state the claim.

9. SAVING ENERGY and driving efficiency: Everyone mentioned this. A lot. Huang commented, “When you build a data center everyone is after being energy efficient.”

10. NVIDIA: The only proper noun on the list. And as a bonus number 10, Anthropic. Many companies said that NVIDIA uses their product or that they have been working with NVIDIA. The AWS speaker name-dropped working with both NVIDIA and Anthropic.

Royal Huang commented that he thought AI agents, agentic AI or multi-agents should have been included in my top 10. However, I didn’t hear many speakers mention them today. I do recall AWS mentioning them. Huang added that edge AI was also important. He had planned to see many agentic AI talks at the AI Infra conference, which runs through September 11th.

###

Michelle McIntyre is a Silicon Valley-based PR consultant and IBM vet. As a social media influencer and blogger, she’s sometimes invited to press conferences. She is attending AI Infra on behalf of SuperTech FT, a robotics non-profit that trains (mostly) young people to do ‘physical AI.’

Photos: Michelle McIntyre took all of the photos here. The stuffed animal booth giveaway is from a company called Xage Security.